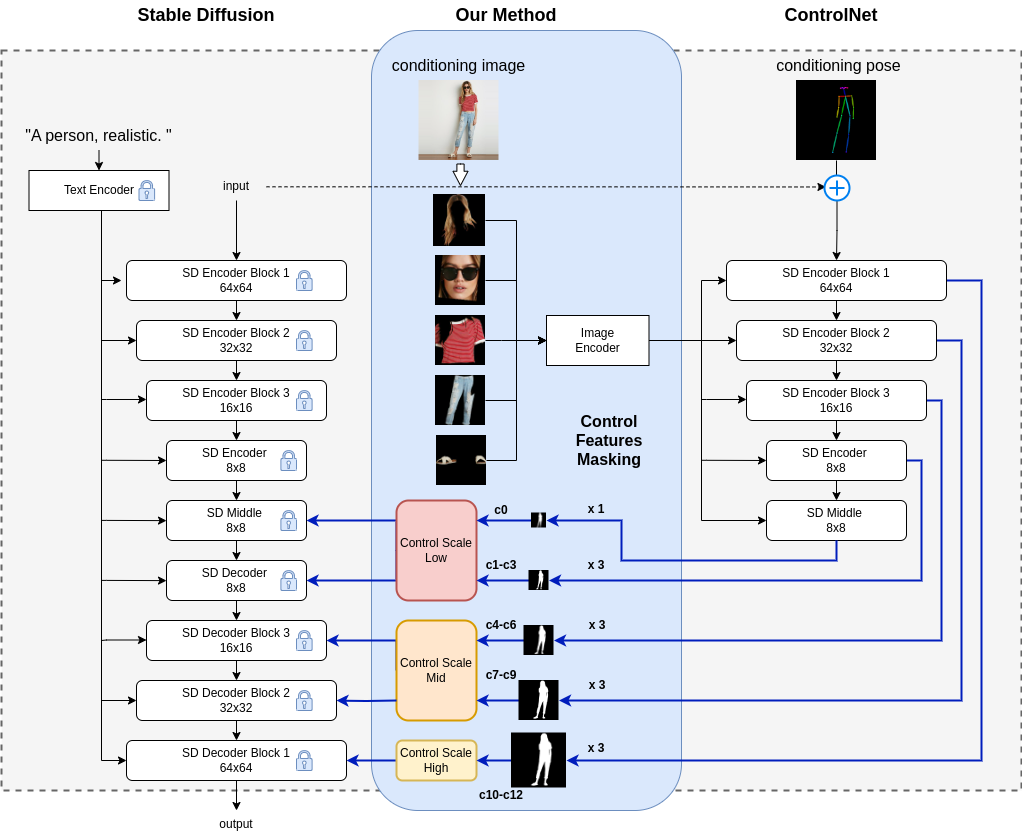

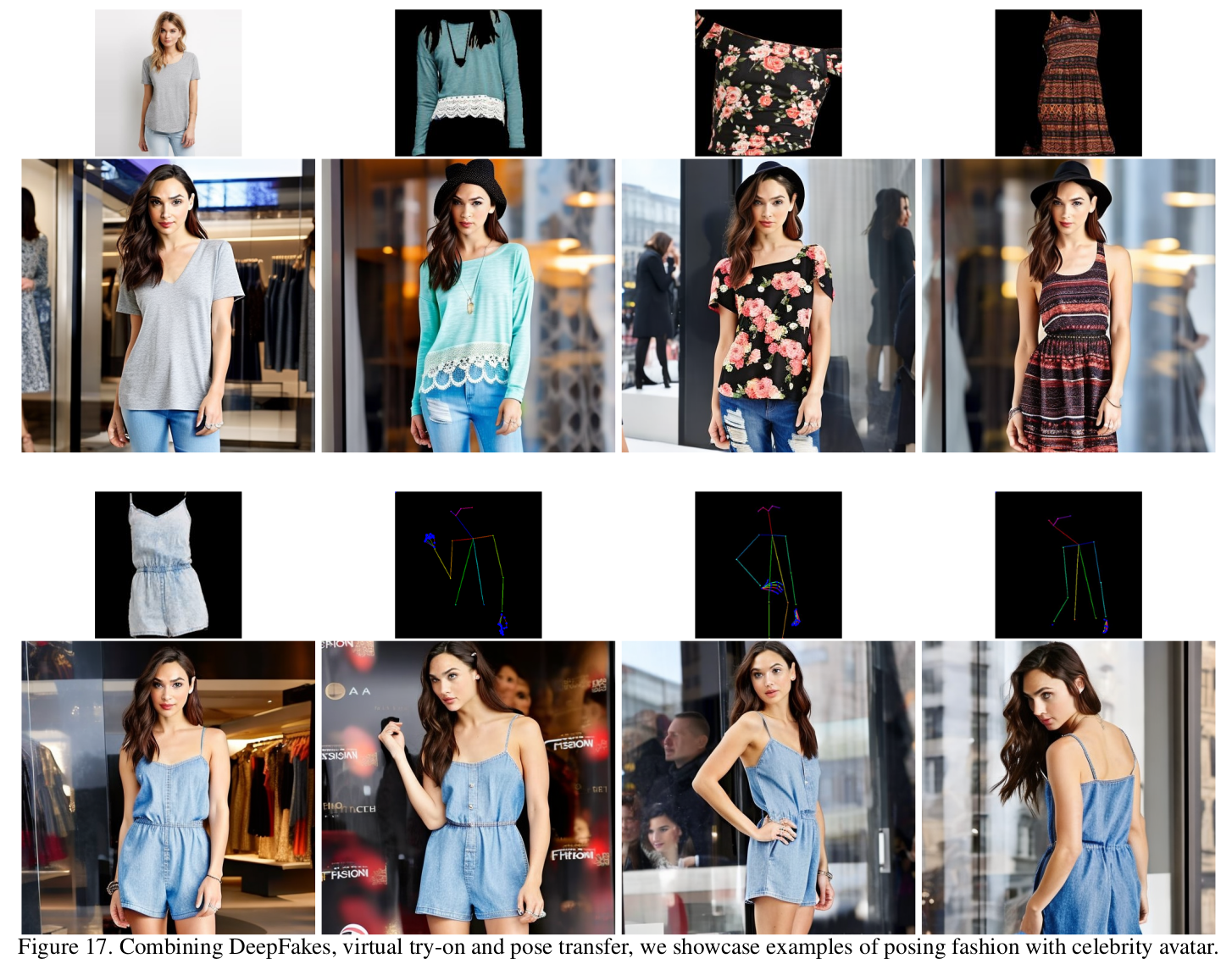

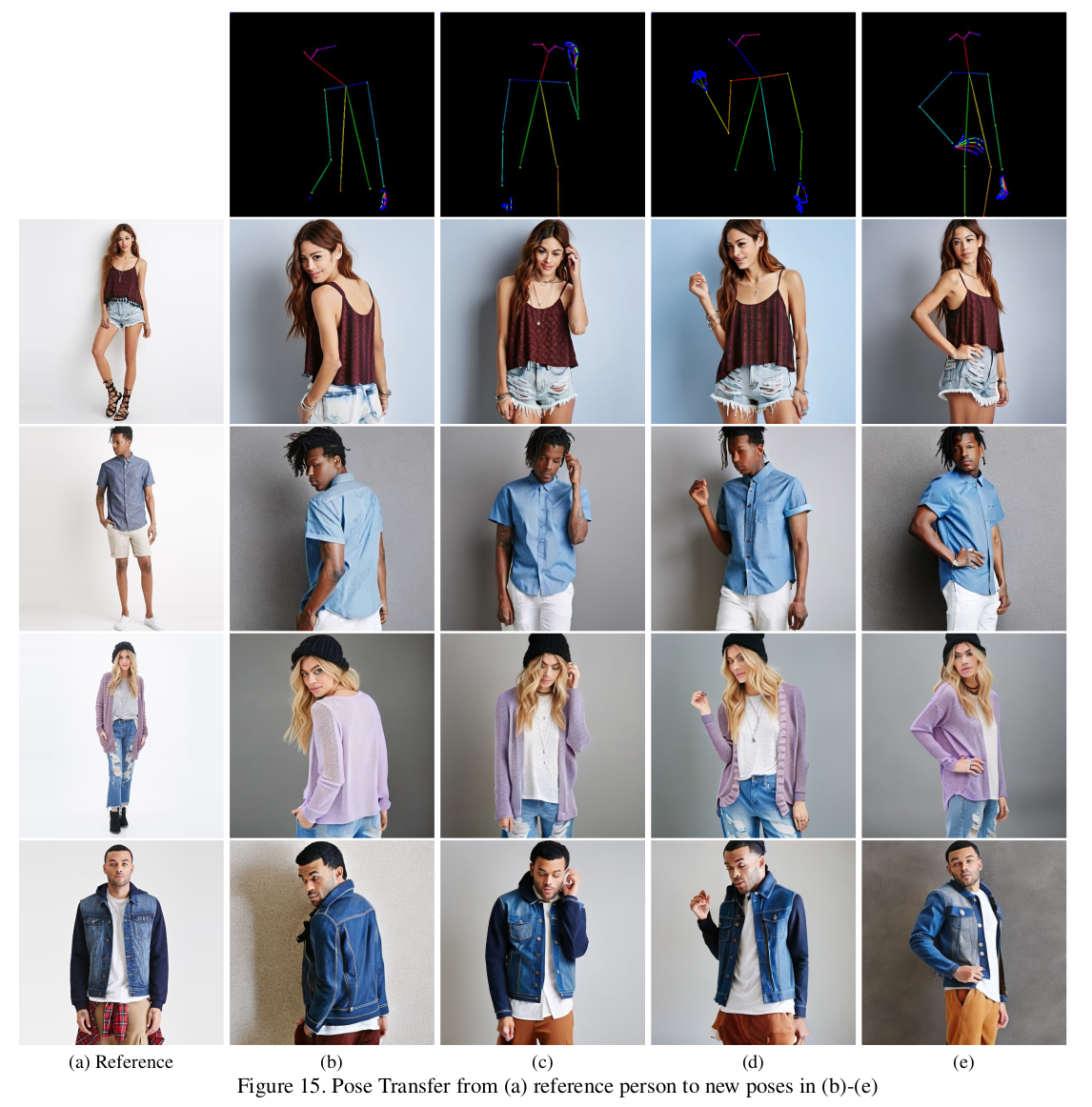

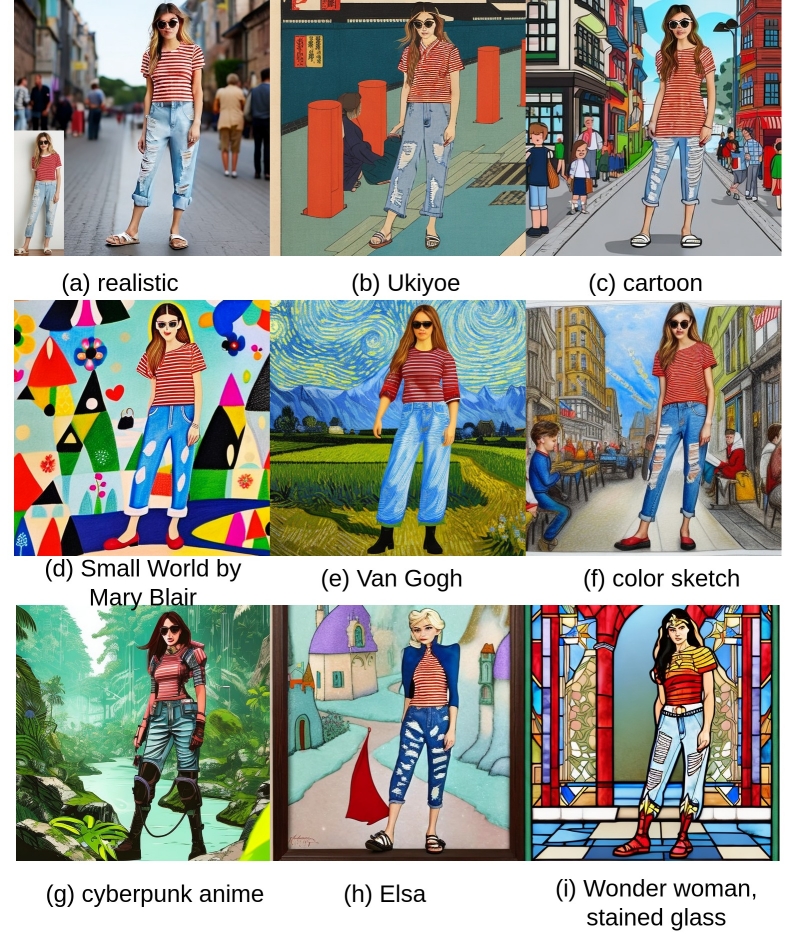

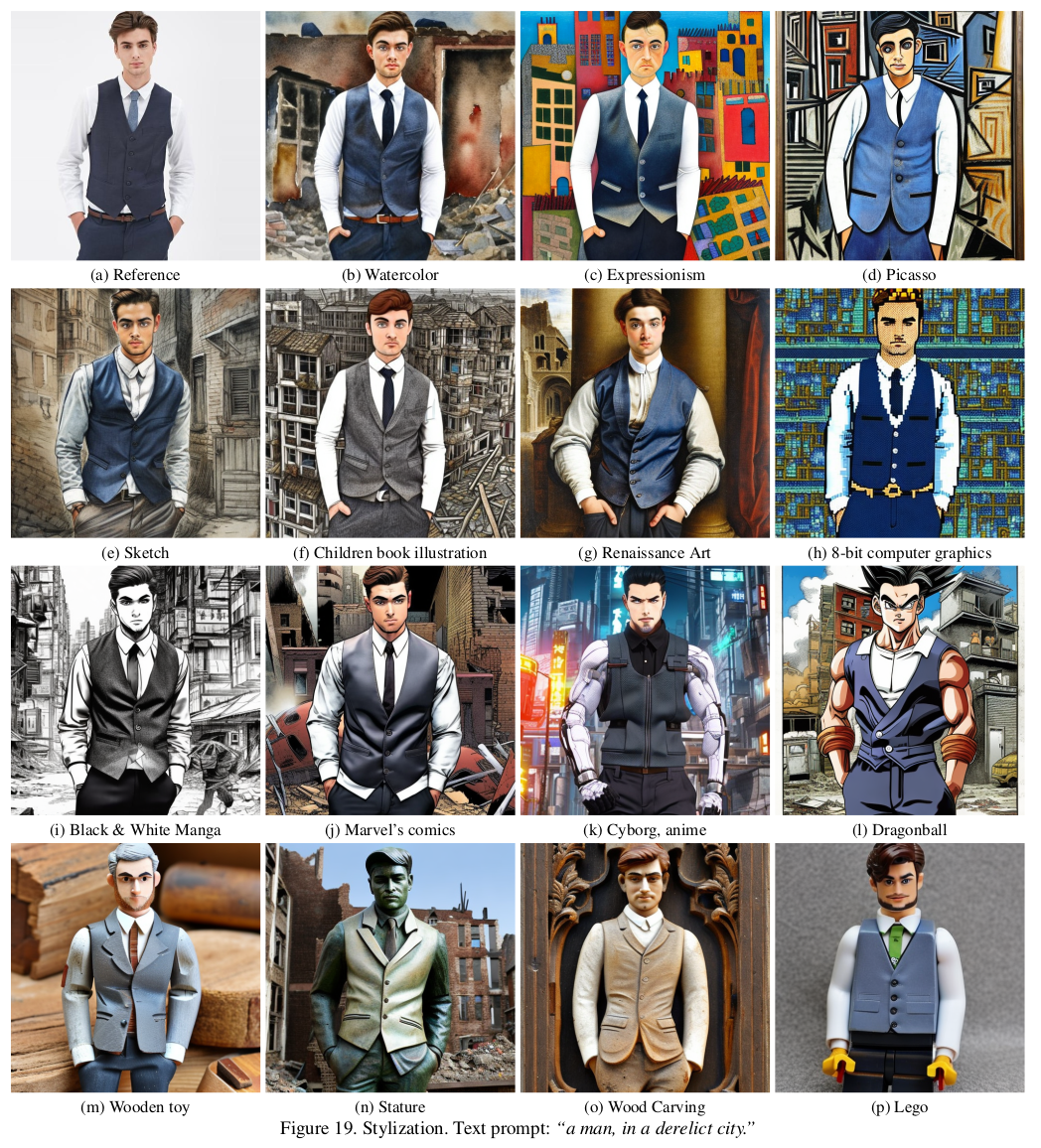

This paper introduces ViscoNet, a novel method that enhances text-to-image human generation models with visual prompting. Unlike existing methods that rely on lengthy text descriptions to control the image structure, ViscoNet allows users to specify the visual appearance of the target object with a reference image. ViscoNet disentangles the object's appearance from the image background and injects it into a pre-trained latent diffusion model (LDM) via a ControlNet branch. In this way, ViscoNet mitigates the style mode collapse problem and enables precise and flexible visual control. We demonstrate the effectiveness of ViscoNet on human image generation, where it can manipulate visual attributes and artistic styles by adjusting the control strength of the visual prompt at different spatial resolutions. We also show that ViscoNet can learn visual conditioning from small, specific object domains while preserving the generative power of the LDM backbone.

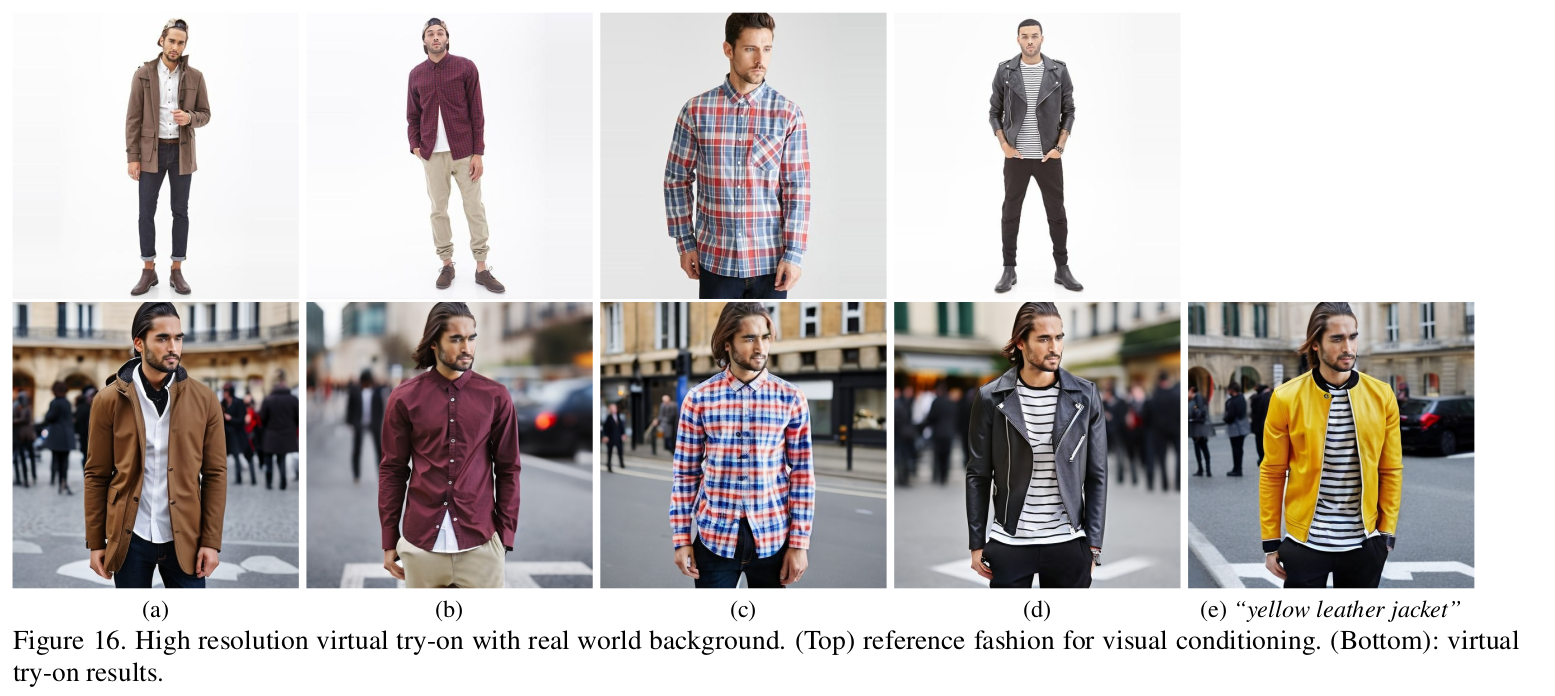

We replace the text embedding in ControlNet with an image embedding. This severs the entanglement between ControlNet and the backbone LDM (Stable Diffusion). We apply human masking to the control signals so that the LDM does not overfit to the blank backgrounds of the small training dataset.
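The two changes above can be pictured with a minimal pure-Python sketch. It assumes a single-channel control signal stored as nested lists and a binary human mask at image resolution that is resized by nearest-neighbour sampling to each feature-map size. The function names (`cross_attention_contexts`, `resize_mask_nearest`, `mask_control_signal`) and the dictionary keys are hypothetical illustrations, not the ViscoNet code, which operates on multi-channel tensors.

```python
from typing import Dict, List

Grid = List[List[float]]


def cross_attention_contexts(text_embedding: object, image_embedding: object) -> Dict[str, object]:
    """Hypothetical routing: the frozen LDM keeps the text embedding,
    while the ControlNet branch cross-attends to the image embedding."""
    return {"ldm_unet": text_embedding, "controlnet": image_embedding}


def resize_mask_nearest(mask: Grid, out_h: int, out_w: int) -> Grid:
    """Nearest-neighbour resize of a binary human mask to a feature-map size."""
    in_h, in_w = len(mask), len(mask[0])
    return [
        [mask[(y * in_h) // out_h][(x * in_w) // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


def mask_control_signal(residual: Grid, human_mask: Grid) -> Grid:
    """Zero a ControlNet residual outside the person, so the control signal
    carries no background information and the LDM generates the background."""
    h, w = len(residual), len(residual[0])
    m = resize_mask_nearest(human_mask, h, w)
    return [[residual[y][x] * m[y][x] for x in range(w)] for y in range(h)]


# Usage: a 4x4 mask whose person occupies the top-right quadrant.
mask = [[0, 0, 1, 1], [0, 0, 1, 1], [0, 0, 0, 0], [0, 0, 0, 0]]
print(mask_control_signal([[0.5, 0.8], [0.3, 0.9]], mask))  # [[0.0, 0.8], [0.0, 0.0]]
```

Only the residual inside the person survives; because the backbone itself is untouched, it remains free to paint any background the text prompt asks for.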

Fixing the visual prompt and changing the age in the text prompt.

We avoid mode collapse by reducing the strength of the visual control signal. For some challenging image styles, we also remove the face visual prompt.
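Both adjustments can be sketched in a few lines of Python. The sketch assumes that a ControlNet residual is added to the backbone feature after multiplication by a scalar strength, and that the visual prompt is a set of per-part embeddings keyed by names such as `"face"` and `"top"`; the keys, the function names `inject_control` and `select_visual_prompts`, and the choice to drop a part by omitting its embedding are all hypothetical.

```python
from typing import Dict, List, Sequence

Vector = List[float]


def inject_control(backbone: Vector, residual: Vector, strength: float) -> Vector:
    """Add a ControlNet residual to a backbone feature, scaled by `strength`.
    strength=1.0 applies full control; smaller values weaken the visual prompt."""
    return [b + strength * r for b, r in zip(backbone, residual)]


def select_visual_prompts(
    part_embeddings: Dict[str, Vector], drop: Sequence[str] = ()
) -> List[Vector]:
    """Keep the per-part prompt embeddings, omitting any part named in `drop`."""
    return [emb for part, emb in part_embeddings.items() if part not in drop]


# Usage: halve the control strength and drop the (hypothetical) "face" part.
print(inject_control([1.0, 2.0], [0.5, -1.0], strength=0.5))  # [1.25, 1.5]
print(select_visual_prompts({"face": [0.1], "top": [0.2]}, drop=("face",)))  # [[0.2]]
```

With `strength=0.0` the backbone feature passes through unchanged, which is the limit in which the text prompt alone determines the style.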

We achieve texture transfer by applying visual control signals only at high spatial resolutions.
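A resolution-dependent strength schedule is one way to express this idea. The sketch below assumes each ControlNet residual is tagged with the side length of its feature map and keeps only those at or above a threshold. The values 64, 32, 16 and 8 assume Stable Diffusion's 64×64 latent for a 512×512 image, and the threshold of 32 is an illustrative choice; `per_resolution_strengths` and `apply_schedule` are hypothetical names.

```python
from typing import Dict, List, Tuple


def per_resolution_strengths(
    resolutions: List[int], min_resolution: int, strength: float = 1.0
) -> Dict[int, float]:
    """Full `strength` for feature maps at or above `min_resolution`, zero below,
    so only the high-resolution (fine-detail) control signals are kept."""
    return {r: (strength if r >= min_resolution else 0.0) for r in resolutions}


def apply_schedule(
    residuals: List[Tuple[int, List[float]]], strengths: Dict[int, float]
) -> List[List[float]]:
    """Scale each (resolution, residual) pair by the strength for its resolution."""
    return [[strengths[res] * v for v in vec] for res, vec in residuals]


# Usage: feature maps of side 64, 32, 16 and 8; keep only 64 and 32.
schedule = per_resolution_strengths([64, 32, 16, 8], min_resolution=32)
print(schedule)  # {64: 1.0, 32: 1.0, 16: 0.0, 8: 0.0}
print(apply_schedule([(64, [0.4]), (16, [0.7])], schedule))  # [[0.4], [0.0]]
```

Zeroing the coarse levels removes the signals most associated with global layout and shape, leaving the fine-scale signals that carry texture.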

```bibtex
@article{cheong2023visconet,
  author  = {Cheong, Soon Yau and Mustafa, Armin and Gilbert, Andrew},
  title   = {ViscoNet: Bridging and Harmonizing Visual and Textual Conditioning for ControlNet},
  journal = {Arxiv Preprint 2312.03154},
  month   = {December},
  year    = {2023}
}
```